Growth of Functions

The growth of a function is determined by the highest order term: if you add a bunch of terms, the function grows about as fast as the largest term (for large enough input values).

For example, $f(x) = x^2+1$ grows as fast as $g(x) = x^2 + 2$ and $h(x) = x^2+x+1$, because for large $x$, $x^2$ is much bigger than $1$, $2$, or $x+1$.

Similarly, constant multiples don't matter that much: $f(x)=x^2$ grows as fast as $g(x)=2x^2$ and $h(x)=100x^2$, because for large $x$, multiplying $x^2$ by a constant does not change it "too much" (at least not as much as increasing $x$).

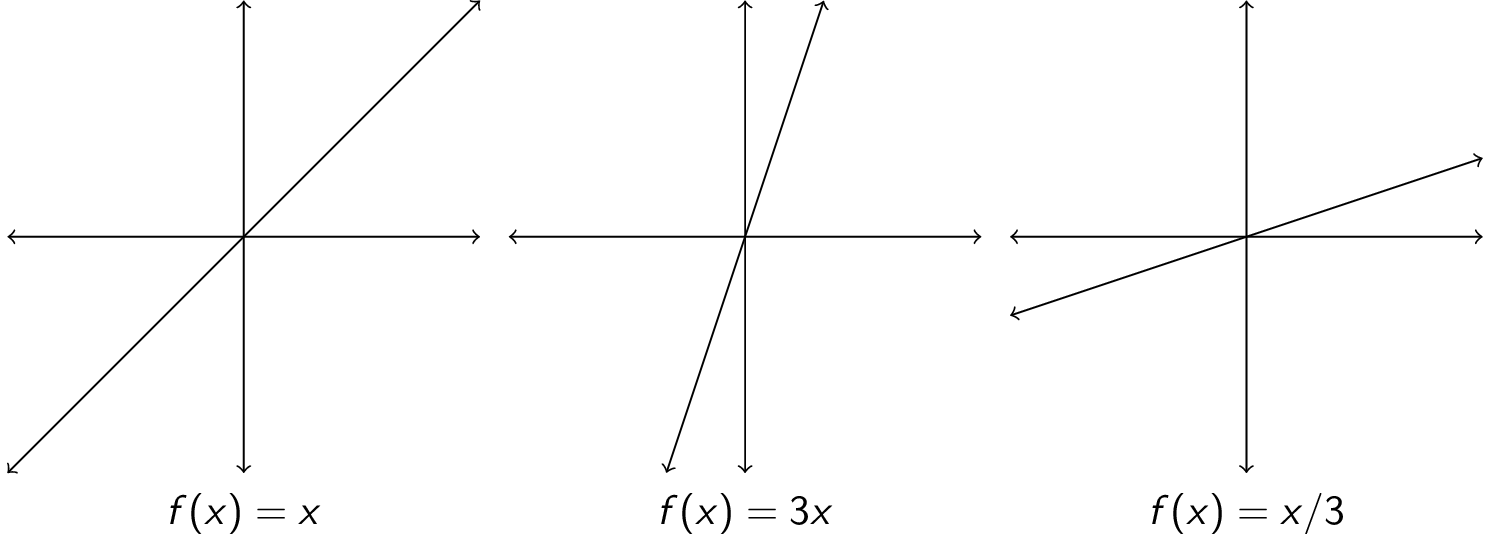

Essentially, we are concerned with the shape of the curve:

All three of these functions are lines; their exact slope/y-intercept does not matter.

Only caring about the highest order term (without constant multiples) corresponds to ignoring differences in hardware/operating system/etc. If the CPU is twice as fast, for example, the algorithm still behaves the same way, even if it executes faster.

Big-Oh Notation

Let $f$ and $g$ be functions from $\mathbb{Z} \rightarrow \mathbb{R}$ or $\mathbb{R} \rightarrow \mathbb{R}$. We say $f(x)$ is $O(g(x))$ if there are constants $c \gt 0$ and $k \gt 0$ such that $0 \le f(n) \le c \times g(n)$ for all $x \ge k$. The constants $c$ and $k$ are called witnesses. We read $f(x)$ is $O(g(x))$ as "$f(x)$ is big-Oh of $g(x)$". We write $f(x) \in O(g(x))$ or $f(x) = O(g(x))$ (though the former is more technically correct).

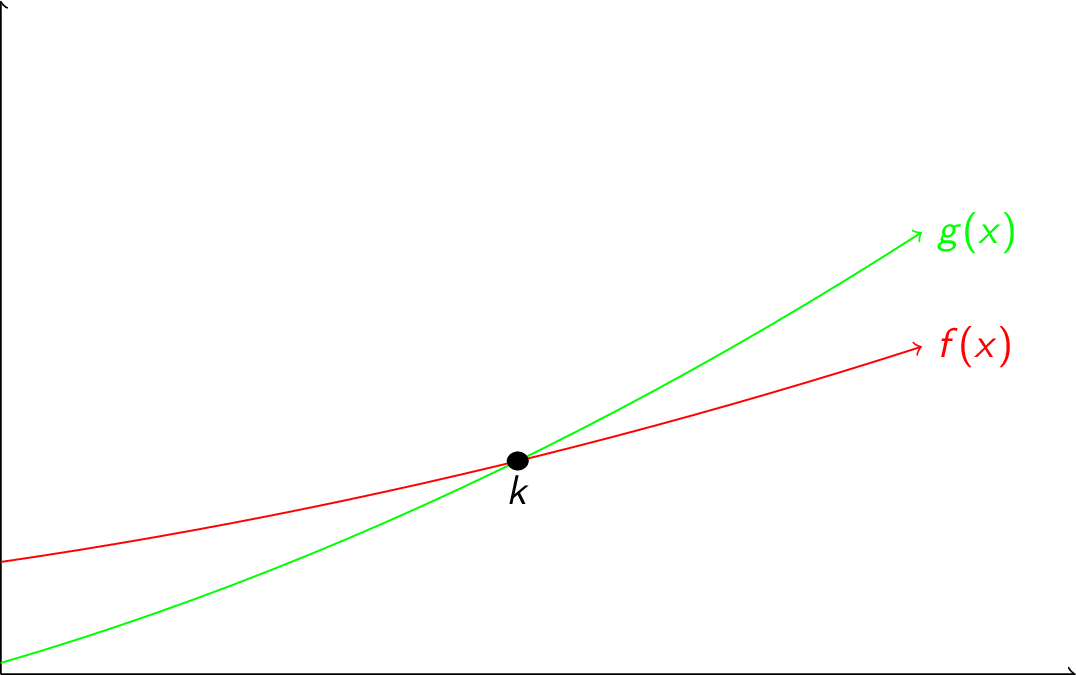

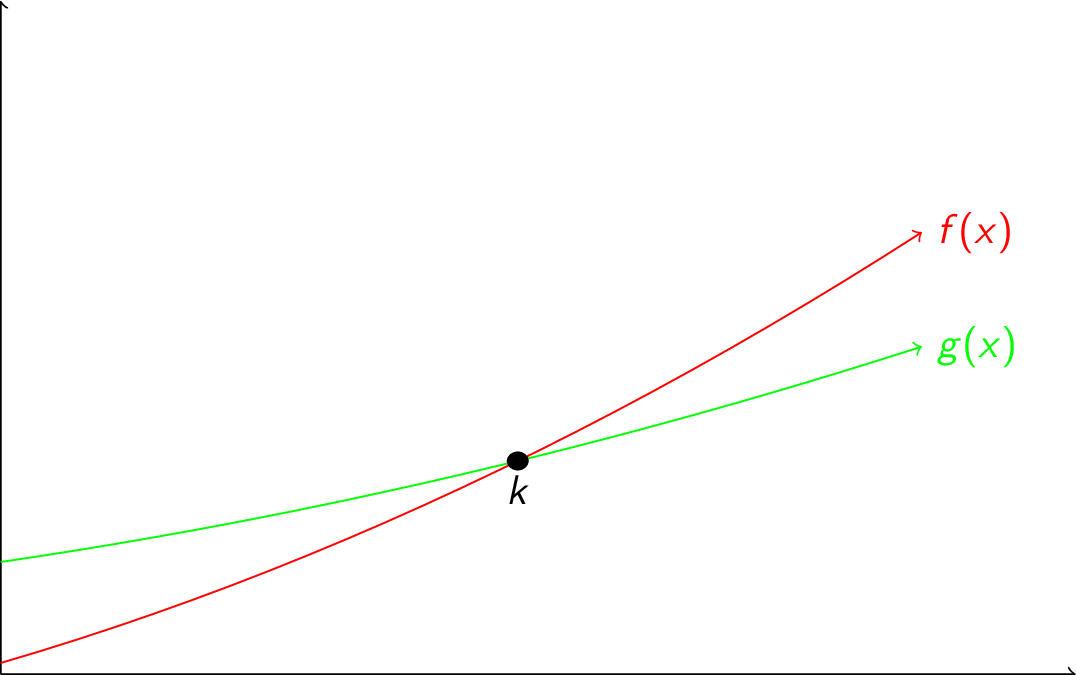

Basically, $f(x)$ is $O(g(x))$ means that, after a certain value of $x$, $f$ is always smaller than some constant multiple of $g$:

Here are some examples that use big-Oh notation:

- To show that $5x^2 + 10x + 3$ is $O(x^2)$: $$5x^2 + 10x + 3 \le 5x^2 + 10x^2 + 3x^2 = 18x^2$$ Each of the above steps is true for all $x \ge 1$, so take $c = 18$, $k=1$ as witnesses.

- To show that $5x^2 - 10x + 3$ is $O(x^2)$: $$5x^2 - 10x + 3 \le 5x^2 + 3 \le 5x^2 + 3x^2 = 8x^2$$ The first step is true as long as $10x > 0$ (which is the same as $x > 0$) and the second step is true as long as $x \ge 1$, so take $c=8$, $k=1$ as witnesses.

-

Is it true that $x^3$ is $O(x^2)$?

Suppose it is true. Then $x^3 \le cx^2$ for $x > k$. Dividing through by $x^2$, we get that $x \le c$. This says that "$x$ is always less than a constant", but this is not true: a line with positive slope is not bounded from above by any constant! Therefore, $x^3$ is not $O(x^2)$.

Typically, we want the function inside the Oh to be as small and simple as possible. Even though it is true, for example, that $5x^2+10x+3$ is $O(x^3)$, this is not terribly informative. Similarly, $5x^2+10x+3$ is $O(2x^2 + 11x + 3)$, but this is not particularly useful.

Here are some important big-Oh results:

-

If $f(x) = a_nx^n + a_{n-1}x^{n-1} + \cdots + a_1x + a_0$ where $a_n \gt 0$, then $f(x)$ is $O(x^n)$.

Proof: If $x>1$, then: \begin{array}{rcl} f(x) &= & a_nx^n + a_{n-1}x^{n-1} + \cdots + a_1x + a_0 \\ &\le & |a_n|x^n + |a_{n-1}|x^{n-1} + \cdots + |a_1|x + |a_0| \\ &\le & |a_n|x^n + |a_{n-1}|x^n + \cdots + |a_1|x^n + |a_0|x^n \\ &= & x^n(|a_n| + |a_{n-1}| + \cdots + |a_1| + |a_0|) \end{array} Therefore, take $c = |a_n| + |a_{n-1}| + \cdots + |a_1| + |a_0| \gt 0$ and $k = 1$.

- What is the sum of the first $n$ integers? $$1+2+3+\cdots+n \le n+n+n+\cdots+n = n \times n = n^2$$ Take $c=1,k=1$ to see that sum is $O(n^2)$. Notice that this agrees with the formula we derived earlier: $\sum_{i=1}^n i = n(n+1)/2$, which is $O(n^2)$.

- What is the growth of $n!$? \begin{array}{rcl} n! &=& n \times (n-1) \times (n-2) \times \cdots \times 2 \times 1 \\ &=& n \times n \times n \times \cdots n \\ &=& n^n \\ \end{array} Therefore, $n!$ is $O(n^n)$ with $c=k=1$

-

What is the growth of $\log n!$?

Take the logarithm of both sides of the previous equation to get $\log n! \le \log n^n$, so $\log n! \le n \log n$. Therefore, $\log n!$ is $O(n \log n)$ with $c=k=1$.

-

How does $\log_2 n$ compare to $n$?

We know that $n \lt 2^n$ (we will prove this later). Taking the logarithm of both sides, we have that $\log_2 n \lt \log_2 2^n = n$. So $\log_2 n$ is $O(n)$ with $c=k=1$.

When using logarithms inside big-Oh notation, the base does not matter. Recall the change-of-base formula: $\log_b n = \frac{\log n}{\log b}$. Therefore, as long as the base $b$ is a constant, it differs from $\log n$ by a constant factor.

Here are some common functions, listed from slowest to fastest growth: $$ O(1), O(\log n), O(n), O(n \log n), O(n^2), O(2^n), O(n!) $$ Caution: there are infinitely many functions between each element of this list!

Big-Omega Notation

As we saw above, big-Oh provides an upper bound for a function. To specify a lower bound, we use big-Omega notation.

Let $f$ and $g$ be functions from $\mathbb{Z} \rightarrow \mathbb{R}$ or $\mathbb{R} \rightarrow \mathbb{R}$. We say $f(x)$ is $\Omega(g(x))$ if there are constants $c \gt 0$ and $k \gt 0$ such that $0 \le c \times g(n) \le f(n)$ for all $x \ge k$. The constants $c$ and $k$ are called witnesses. We read $f(x)$ is $\Omega(g(x))$ as "$f(x)$ is big-Oh of $g(x)$". We write $f(x) \in \Omega(g(x))$ or $f(x) = \Omega(g(x))$ (though the former is more technically correct).

Basically, $f(x)$ is $\Omega(g(x))$ means that, after a certain value of $x$, $f$ is always bigger than some constant multiple of $g$:

Here are some examples that use big-Omega notation:

- To show that $5x^3 + 3x^2 + 2$ is $\Omega(x^3)$: $$5x^3 + 3x^2 + 2 \ge 5x^3 \ge x^3$$ Therefore, take $c=k=1$.

- To show that $x^2 -3x + 4$ is $\Omega(x^2)$: \begin{array}{rcl} x^2 - 3x + 4 &=& \frac{1}{2}x^2 + \frac{1}{2}x^2 - 3x + 4 \\ &=& \frac{1}{2}x^2 + \left( \frac{1}{2}x^2 - 3x + 4 \right) \\ &\ge& \frac{1}{2}x^2 \end{array} The last step is true as long as $\frac{1}{2}x^2 - 3x + 4 \ge 0$, which is true when $x \gt 6$. Therefore, take $c = 1/2, k=6$.

-

Is it true that $3x+1$ is $\Omega(x^2)$?

Suppose it is true. Then $3x+1 \ge cx^2$ for $x \gt k$. Dividing through by $x^2$, we get that $3/x + 1/x^2 \ge c$. Notice that as $x$ gets bigger, the left hand side gets smaller, so this cannot be true. Therefore, $3x+1$ is not $\Omega(x^2)$.

- What is the sum of the first $n$ integers? \begin{array}{rcl} && 1+2+3+\cdots+n \\ &\ge& \left\lceil \frac{n}{2} \right\rceil + \left(\left\lceil \frac{n}{2} \right\rceil + 1\right) + \left(\left\lceil \frac{n}{2} \right\rceil + 2\right) + \cdots + n \\ &\ge& \left\lceil \frac{n}{2} \right\rceil + \left\lceil \frac{n}{2} \right\rceil + \cdots + \left\lceil \frac{n}{2} \right\rceil \\ &=& (n-\left\lceil \frac{n}{2} \right\rceil+1)\left\lceil \frac{n}{2} \right\rceil \\ &\ge& \left(\frac{n}{2}\right)\left(\frac{n}{2}\right) \\ &=& \frac{n^2}{4} \end{array} Therefore, take $c=1/4,k=1$ to show that the sum is $\Omega(n^2)$ (which matches with our formula for this sum).

Big-Theta Notation

In the previous example, we showed that $\sum_{i=1}^n i = \Omega(n^2)$. Earlier, we also showed that this sum is $O(n^2)$. We have special notation for such situations:

Let $f$ and $g$ be functions from $\mathbb{Z} \rightarrow \mathbb{R}$ or $\mathbb{R} \rightarrow \mathbb{R}$. We say $f(x)$ is $\Theta(g(x))$ if $f(x)$ is $O(g(x))$ and $f(x)$ is $\Omega(g(x))$. We read $f(x)$ is $\Theta(g(x))$ as "$f(x)$ is big-Theta of $g(x)$". We write $f(x) \in \Theta(g(x))$ or $f(x) = \Theta(g(x))$ (though the former is more technically correct).

It might be helpful to think of big-Oh/Omega/Theta as follows:

- $\le$ is to numbers as big-Oh is to functions

- $\ge$ is to numbers as big-Omega is to functions

- $=$ is to numbers as big-Theta is to functions